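A while ago I trained LeNet-5 and ResNet-18 networks on three datasets (MNIST, CIFAR-10 and ImageNet 2012) for NVDLA, then compiled and ran them in NVDLA's virtual platform (vp) environment. The models can be downloaded below.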

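NVDLA's documentation says it supports 8-bit inference, but the official instructions on how to quantize a floating-point model to 8 bits and run it on NVDLA are sparse. This post covers how to quantize the models and fixes for some problems in the official code.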

Pretrained Caffe models

caffe_lenet_mnis resnet18-cifar10-caffe resnet18-imagenet-caffe

NVDLA quantization

The NVDLA GitHub repository outlines the general approach to quantization in LowPrecision.md.

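According to that document, we need TensorRT to quantize the model, which produces a calibration table. For how TensorRT does this, see TensorRT's INT8 quantization method. In short, it computes a scale factor that maps floating-point values to fixed-point values; the algorithm that picks this factor only needs to be understood at a high level. Here is a Zhihu article on it: https://zhuanlan.zhihu.com/p/58182172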
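To make "a scale factor from floating point to fixed point" concrete, here is a minimal symmetric int8 sketch. It derives the scale from the largest absolute value (amax / 127), which is plain max calibration; TensorRT's entropy calibrator instead searches for a clipping threshold that minimizes KL divergence, so only the float/int8 mapping below carries over. The function names are hypothetical.

```python
import numpy as np


def symmetric_int8_scale(x):
    """Scale that maps the largest |x| to 127 (max calibration, not entropy calibration)."""
    amax = float(np.max(np.abs(x)))
    return amax / 127.0 if amax > 0 else 1.0


def quantize(x, scale):
    # Divide by the scale, round to nearest, saturate to the symmetric range [-127, 127]
    return np.clip(np.round(x / scale), -127, 127).astype(np.int8)


def dequantize(q, scale):
    # Map int8 codes back to approximate float values
    return q.astype(np.float32) * scale


x = np.array([-2.0, -0.5, 0.0, 0.25, 2.0], dtype=np.float32)
s = symmetric_int8_scale(x)   # 2.0 / 127
q = quantize(x, s)
x_hat = dequantize(q, s)      # each element is within s / 2 of x
```

A smaller threshold than amax gives finer resolution for typical values at the cost of clipping outliers; choosing that trade-off is what the calibration step is for.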

The steps are as follows:

Generating the calibration table with TensorRT

I recommend using the official Docker image:

```shell
docker pull nvcr.io/nvidia/tensorrt:20.12-py3
```

At first I wondered whether installing TensorRT just to generate a calibration table was overkill, so I first tried BUG1989's project https://github.com/BUG1989/caffe-int8-convert-tools, but unfortunately it didn't work.

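It turns out that ./nvdla_compiler does not read the generated calibration table (a text file) directly; it reads a JSON file. The conversion script, calib_txt_to_json.py, only supports calibration tables produced by TensorRT, not the ones generated by BUG1989's repository.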

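In practice, BUG1989's approach is also less sophisticated than TensorRT's. TensorRT calibrates using a set of samples drawn from the validation set, and it can report the accuracy after calibration, i.e. how accurate the quantized model is, which is very useful.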

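Recent TensorRT versions support INT8 quantization through the Python API. The official example is in /opt/tensorrt/samples/python/int8_caffe_mnist, and there is a matching C++ version. I prefer Python: it is more readable and easier to adapt, and the C++ version ends up calling the same .so libraries anyway.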

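To write your own quantization flow for a network, the core step is to define a class that inherits from trt.IInt8EntropyCalibrator2 (see calibrator.py in the MNIST sample for details). You need to supply three things: the dataset used for training, deploy.prototxt, and the caffemodel, and then write your own data-loading code. For example, to quantize the ResNet-18 network for ImageNet, I wrote it like this: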

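```python
import cv2  # assumption: OpenCV loads images in BGR order, matching the Caffe mean below
import numpy as np

fileList = []  # names of the calibration images; must be filled before load_data() is called


def load_data(filepath):
    global fileList
    # Per-channel BGR mean (104, 117, 123) broadcast over a 3 x 224 x 224 image
    mean = np.zeros([3, 224, 224], dtype=np.float32)
    mean[0, :, :] = 104
    mean[1, :, :] = 117
    mean[2, :, :] = 123
    test_imgs = []
    for img_path in fileList:
        img = cv2.imread(filepath + '/' + img_path)              # H x W x C, BGR, uint8
        img = cv2.resize(img, (224, 224)).astype(np.float32)
        img = img.transpose((2, 0, 1))                             # HWC -> CHW
        test_imgs.append(img - mean)
    return np.ascontiguousarray(test_imgs).astype(np.float32)


def load_labels(filepath):
    global fileList
    # Label file: one "<image name> <class id>" pair per line
    label_of = {}
    with open(filepath, 'r') as f:
        lines = f.readlines()
    for each in lines:
        if not each.strip():
            continue
        name, labels = each.strip('\n').split(' ')
        label_of[name] = int(labels)
    test_labels = [label_of[each] for each in fileList]
    return np.ascontiguousarray(test_labels)
```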
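The loaders read from a global fileList whose construction isn't shown. One way to fill it, assuming a label file with one "filename label" pair per line (the same format load_labels parses), is to draw a fixed-seed random subset of the validation set, since calibration only needs a representative sample. The helper name and the default seed are hypothetical.

```python
import random


def sample_calibration_files(label_file, n, seed=0):
    """Pick n image names from a 'filename label' list, e.g. an ImageNet val.txt."""
    with open(label_file) as f:
        names = [line.split()[0] for line in f if line.strip()]
    rng = random.Random(seed)  # fixed seed -> the same calibration set on every run
    return rng.sample(names, min(n, len(names)))
```

For example, `fileList = sample_calibration_files("val.txt", 640)` (path and count hypothetical). Picking a multiple of the calibrator's batch_size avoids wasting images, because get_batch returns None once fewer than batch_size images remain.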

And the custom EntropyCalibrator:

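```python
import os

import numpy as np
import pycuda.autoinit  # noqa: F401  (creates the CUDA context)
import pycuda.driver as cuda
import tensorrt as trt


class CustomEntropyCalibrator(trt.IInt8EntropyCalibrator2):
    def __init__(self, training_data, cache_file, batch_size=64):
        # A subclass of a TensorRT class must call the parent constructor explicitly
        trt.IInt8EntropyCalibrator2.__init__(self)
        self.cache_file = cache_file
        # load_data() is the loader defined above: N x 3 x 224 x 224 float32
        self.data = load_data(training_data)
        self.batch_size = batch_size
        self.current_index = 0
        # Device memory for one full batch
        self.device_input = cuda.mem_alloc(self.data[0].nbytes * self.batch_size)

    def get_batch_size(self):
        return self.batch_size

    def get_batch(self, names):
        # Returning None tells TensorRT the calibration data is exhausted
        if self.current_index + self.batch_size > self.data.shape[0]:
            return None
        current_batch = int(self.current_index / self.batch_size)
        if current_batch % 10 == 0:
            print("Calibrating batch {:}, containing {:} images".format(current_batch, self.batch_size))
        # Copy a flattened, C-contiguous batch to the device
        batch = np.ascontiguousarray(
            self.data[self.current_index:self.current_index + self.batch_size].ravel())
        cuda.memcpy_htod(self.device_input, batch)
        self.current_index += self.batch_size
        return [self.device_input]

    def read_calibration_cache(self):
        # Reuse an existing cache instead of recalibrating; otherwise implicitly return None
        if os.path.exists(self.cache_file):
            with open(self.cache_file, "rb") as f:
                return f.read()

    def write_calibration_cache(self, cache):
        with open(self.cache_file, "wb") as f:
            f.write(cache)
```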

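All four methods are called automatically during build_engine. Pay special attention to get_batch: the batch must be a flattened, contiguous array (via np.ascontiguousarray) when it is copied to the device; otherwise the calibration data is badly distorted.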
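One likely cause of that distortion, assumed here rather than confirmed, is memory layout. A transposed NumPy array (for example HWC images turned into CHW) is only a strided view: its memory is still in the old order, so anything that copies raw memory to the GPU sends the wrong layout. The small demo below uses arbitrary array sizes.

```python
import numpy as np

# Two tiny 4 x 4 RGB "images" stored as N x H x W x C
nhwc = np.arange(2 * 4 * 4 * 3, dtype=np.float32).reshape(2, 4, 4, 3)

nchw = nhwc.transpose(0, 3, 1, 2)   # strided view; memory is still NHWC-ordered
print(nchw.flags["C_CONTIGUOUS"])    # False

fixed = np.ascontiguousarray(nchw)   # real copy laid out as N x C x H x W
print(fixed.flags["C_CONTIGUOUS"])   # True

# Raw memory order differs: the view's buffer interleaves channels,
# while the contiguous copy starts with all channel-0 pixels.
underlying = nhwc.reshape(-1)[:4]    # 0, 1, 2, 3
intended = fixed.reshape(-1)[:4]     # 0, 3, 6, 9
```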

Output after running the program:

```shell
root@ea6ecad42f26:/opt/tensorrt# python samples/python/int8_caffe_cifar10/sample.py
```

The cache file generated in the working directory is the calibration table:

```
TRT-7202-EntropyCalibration2
```

It contains many Unnamed Layer entries; we will deal with them later.
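The cache is plain text: the first line is a version header (here TRT-7202-EntropyCalibration2), and each following line has the form `tensor_name: hexvalue`. As far as I know, the hex string is the big-endian IEEE-754 bit pattern of the float32 scale. The hypothetical helper below (not part of TensorRT or the NVDLA repository) decodes the file and lists the Unnamed Layer entries; the `(Unnamed Layer* N)` name pattern is an assumption about how TensorRT labels them.

```python
import re
import struct

UNNAMED = re.compile(r"\(Unnamed Layer\* (\d+)\)")


def parse_calibration_cache(text):
    """Return (header, {tensor_name: scale}) from a TensorRT calibration cache."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    header, scales = lines[0], {}
    for ln in lines[1:]:
        name, _, hexval = ln.rpartition(":")
        # 8 hex digits -> 4 bytes -> big-endian float32
        scales[name.strip()] = struct.unpack(">f", bytes.fromhex(hexval.strip()))[0]
    return header, scales


def unnamed_entries(scales):
    # Entries the NVDLA compiler cannot match to a layer name
    return [name for name in scales if UNNAMED.search(name)]
```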

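Converting the calibration table to JSON

The compiler expects a JSON file, so we convert the table with a script (note that we are no longer inside the TensorRT Docker container; switch to the nvdla/vp environment):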

```shell
cd /usr/local/nvdla
```

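We can now pass this JSON file to the compiler's calibtable argument. However, because the generated table contains many Unnamed Layer entries, the compiler reports errors that many layers cannot find their scale. This bug was reported back in June 2020: https://github.com/nvdla/sw/issues/201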

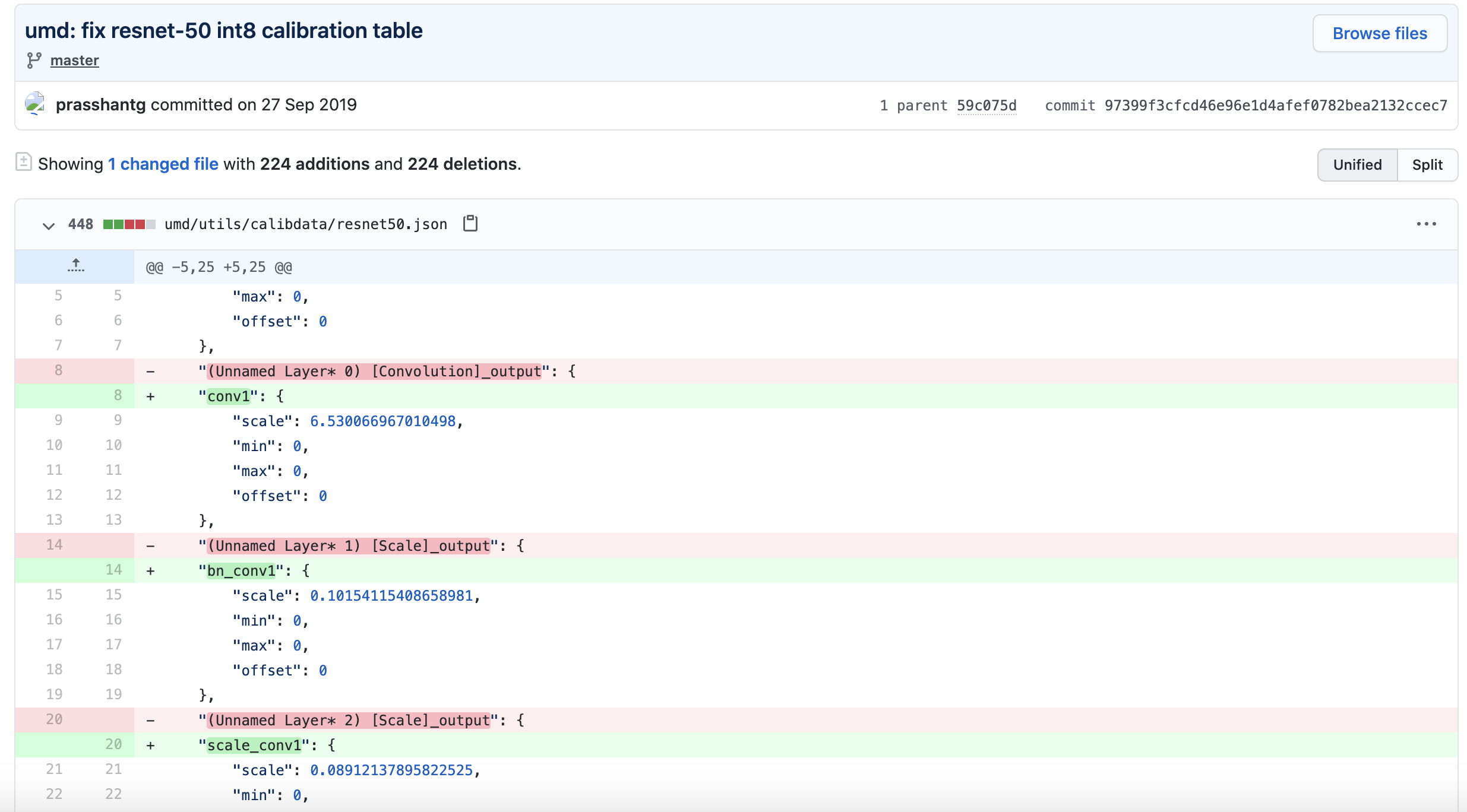

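So far nobody in that thread has posted a solution; the original NVDLA maintainers seem to have given up on the project. I compared this with the history of the official resnet50.json.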

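It turns out the official file once had the same problem. The fix was never published, but it essentially replaces each scale's name with the name of the corresponding network node, so I wrote a Python script that does the same. (I considered opening a PR on the original project, but parsing the prototxt conveniently requires installing an extra library, so I dropped the idea.)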

```python
import json
# ...
```

This script produces imagenet_resnet18_calibration.cache. Note that running it requires a Caffe environment with the pycaffe interface built.
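The original script is not reproduced here. As a rough, hypothetical sketch of the same idea (rename each Unnamed Layer scale to the name of the corresponding prototxt layer), the code below makes two assumptions that need checking for your own network: that N in `(Unnamed Layer* N)` is the index of the N-th layer TensorRT's Caffe parser creates, and that this equals the N-th non-Input layer in deploy.prototxt. It also swaps pycaffe for a simple regex scan that assumes every layer block starts with `name:` followed by `type:`.

```python
import re

LAYER = re.compile(r'layer\s*\{\s*name:\s*"([^"]+)"\s*type:\s*"([^"]+)"')
UNNAMED = re.compile(r"^\(Unnamed Layer\* (\d+)\)")


def prototxt_layer_names(prototxt_text):
    # Input layers create no TensorRT layer, so they are skipped (assumption)
    return [name for name, ltype in LAYER.findall(prototxt_text) if ltype != "Input"]


def rename_unnamed(scales, layer_names):
    """Map '(Unnamed Layer* N) ...' keys to layer_names[N]; keep named keys as-is."""
    renamed = {}
    for key, value in scales.items():
        m = UNNAMED.match(key)
        if m and int(m.group(1)) < len(layer_names):
            renamed[layer_names[int(m.group(1))]] = value
        else:
            renamed[key] = value
    return renamed
```

Comparing the renamed keys against the layer names in the official resnet50.json is a quick way to test whether the index assumption holds.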

Compile and Runtime

Compiling for fp16:

```shell
./nvdla_compiler --prototxt resnet18-imagenet-caffe/deploy.prototxt --caffemodel resnet18-imagenet-caffe/resnet-18.caffemodel --profile imagenet-fp16
```

Running with fp16:

```shell
./nvdla_runtime --loadable imagenet-fp16.nvdla --image resnet18-imagenet-caffe/resized/0_tench.jpg --mean 104,117,123 --rawdump
```

```shell
# cat output.dimg
```

The raw dump is tiring to read, so you can look at just the top 5:

```shell
cat output.dimg | sed "s#\ #\n#g" | cat -n | sort -gr -k2,2 | head -5
```
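The pipeline puts each value on its own line, numbers the lines with cat -n, and sorts by value in descending order. Because cat -n counts from 1, the printed number is the class index plus one. A Python equivalent that reports 0-based class indices directly, assuming output.dimg holds whitespace-separated numbers (function names hypothetical):

```python
def top_k(values_text, k=5):
    """Return [(class_index, score), ...] for the k highest scores, best first."""
    scores = [float(tok) for tok in values_text.split()]
    return sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)[:k]


def top_k_file(path="output.dimg", k=5):
    # Read the raw dump written by nvdla_runtime --rawdump
    with open(path) as f:
        return top_k(f.read(), k)
```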

Compiling for int8:

```shell
# compile per-kernel
```

Running with int8:

The int8 output contains one integer per class: the first value (class 0) is 127, one other class has 2, and every other value is 0.
